What is a Graphics Processing Unit? (Explained Simply)

Think of your GPU as the unsung hero in your gaming rig, the Gandalf that shows up shouting “You shall not lag!” every time you launch a game. While your CPU is busy juggling emails, Discord calls, and the 47 Chrome tabs you swore you’d close, the GPU is laser‑focused on turning 1s and 0s into the beautiful frames that hit your monitor.

Here’s the no‑nonsense version:

- CPU = multitasking brain → Uses a handful of powerful cores that work through tasks largely one after another (like that mate who insists on doing the dishes before making a brew).

- GPU = specialist artist → Splits the job into thousands of mini‑tasks and blitzes them all at once (like an army of goblins cranking out pixels).

So, when you’re running around in Elden Ring or building your empire in Civilization VI, it’s the GPU doing the heavy lifting to make sure the dragons look fierce, the shadows look spooky, and your framerate doesn’t turn into a slideshow.

A light-hearted Gameplay Techy comic showing how GPUs act like a wizard and goblin army, building dragons frame by frame.

GPU vs CPU: What’s the Difference?

Let’s settle the age-old bar fight between these two silicon heavyweights.

- CPU (Central Processing Unit): The commander. It’s great at complex single tasks, making decisions, and keeping your PC from combusting when you’ve got Spotify, OBS, and a spreadsheet open all at once. Think of it as the guild leader who keeps everyone in line.

- GPU (Graphics Processing Unit): The berserker army. It doesn’t do politics, it just charges in with thousands of cores, swinging axes at pixels until your game looks smooth. Perfect for parallel tasks like rendering shadows, explosions, or that ridiculous number of sheep you trained in Age of Empires II.

In other words: CPUs are great at doing a few things really smartly, while GPUs are great at doing a ridiculous number of things all at once. Put them together, and you’ve got the brains and the brawn of your gaming setup.
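To see why that split matters, here’s a minimal Python sketch. The function names and the “brighten by 40” effect are made up for illustration. Because every pixel can be shaded without looking at its neighbours, the same job can be done one pixel at a time (CPU-style) or handed out to a pool of workers (GPU-style), and the frame comes out identical either way.

```python
from concurrent.futures import ThreadPoolExecutor

def shade_pixel(value: int) -> int:
    """Hypothetical toy 'shader': brighten one pixel by 40, capped at 255."""
    return min(value + 40, 255)

def render_sequential(pixels: list[int]) -> list[int]:
    # CPU-style: one pixel after another, in order.
    return [shade_pixel(p) for p in pixels]

def render_parallel(pixels: list[int], workers: int = 8) -> list[int]:
    # GPU-style: each pixel is independent, so the work can be handed to many
    # workers at once. pool.map still returns results in the original order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(shade_pixel, pixels))

if __name__ == "__main__":
    frame = [0, 100, 200, 250] * 4  # a tiny 16-pixel "frame"
    assert render_sequential(frame) == render_parallel(frame)
    print(render_parallel(frame)[:4])  # [40, 140, 240, 255]
```

In standard CPython, threads won’t actually make this faster (the interpreter runs one thread of Python code at a time), so treat the pool as a stand-in for the idea of “many cores”, not a benchmark. A real GPU runs thousands of these tiny jobs at once in hardware.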

How Does a GPU Render Frames?

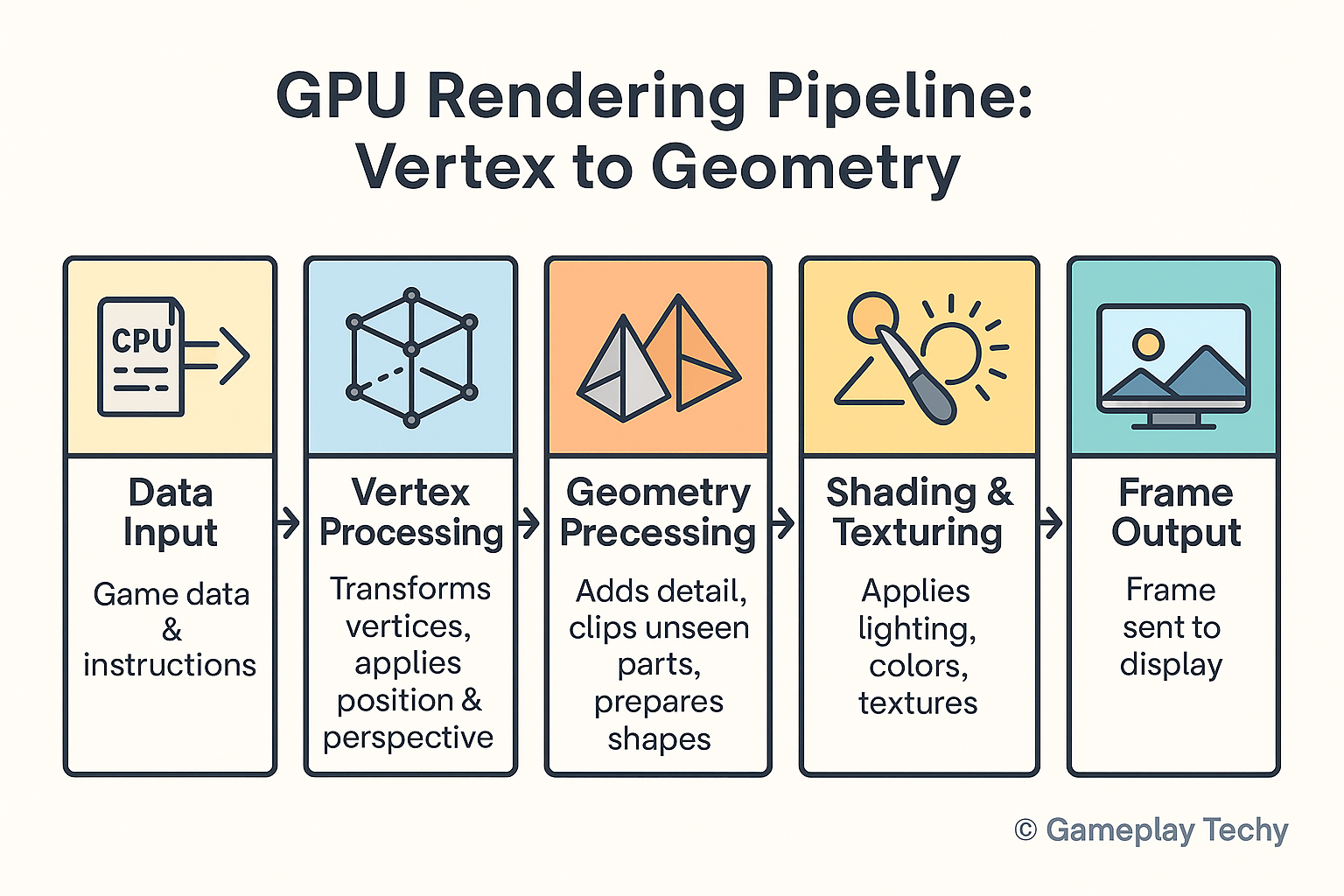

Every frame on your screen is basically a magic trick, and the GPU is the magician pulling it off dozens or even hundreds of times per second. Here’s how the trick works:

1. Data Input: The CPU hands over instructions – where enemies are, where your sword is swinging, what textures need loading. Basically, it gives the GPU a shopping list.

2. Vertex Processing: The GPU plots out 3D shapes and positions them in the game world, like setting the stage props before the curtain rises.

3. Rasterization: This is where 3D becomes 2D. The GPU flattens the world into pixels that your monitor can understand, kind of like pressing a LEGO castle into a painting.

4. Shading & Texturing: Now the fun begins. Lighting, shadows, colors, and textures get slapped on, turning wireframe skeletons into dragons, forests, or your character’s shiny new armor.

5. Frame Output: Once dressed up, the finished frame gets sent to your monitor. And then the GPU immediately starts working on the next one. Repeat this process 60, 120, or even 240 times a second.
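If you fancy seeing the magic trick from backstage, here’s a toy, CPU-only Python sketch that walks one triangle through the five steps and prints the result as ASCII art. It’s a heavy simplification: the triangle coordinates, the 20-by-10 “screen” and the shading rule are all made up, and real GPUs add depth testing, texturing, lighting and much more, running it in parallel hardware.

```python
# Toy, CPU-only walk-through of the five steps for a single triangle.
# All coordinates, the screen size and the shading rule are made up for illustration.

WIDTH, HEIGHT = 20, 10  # a tiny 20-by-10 "monitor"

def project(vertex):
    """Step 2, vertex processing: perspective-project a 3D point (x, y, z) to pixel coords."""
    x, y, z = vertex
    sx, sy = x / z, y / z             # farther away (bigger z) means closer to the middle
    px = (sx + 1) * 0.5 * WIDTH       # map roughly [-1, 1] to [0, WIDTH]
    py = (1 - sy) * 0.5 * HEIGHT      # flip y so +y points up on screen
    return px, py

def edge(a, b, p):
    """Edge function: its sign tells which side of the line a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize(tri):
    """Step 3, rasterization: list the pixels whose centers fall inside the 2D triangle."""
    a, b, c = tri
    covered = []
    for y in range(HEIGHT):
        for x in range(WIDTH):
            p = (x + 0.5, y + 0.5)
            w0, w1, w2 = edge(b, c, p), edge(c, a, p), edge(a, b, p)
            # Inside when all three tests share a sign (handles either vertex order).
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                covered.append((x, y))
    return covered

def shade(y):
    """Step 4, shading: a made-up rule that uses denser characters lower on the screen."""
    ramp = ".:-=+*#"
    return ramp[min(y * len(ramp) // HEIGHT, len(ramp) - 1)]

def render(vertices):
    framebuffer = [[" "] * WIDTH for _ in range(HEIGHT)]
    screen_tri = [project(v) for v in vertices]
    for x, y in rasterize(screen_tri):
        framebuffer[y][x] = shade(y)
    # Step 5, frame output: turn the framebuffer into text "sent to the monitor".
    return "\n".join("".join(row) for row in framebuffer)

# Step 1, data input: the CPU's "shopping list", here just three 3D corners.
triangle = [(0.0, 0.8, 2.0), (-0.8, -0.8, 2.0), (0.8, -0.8, 2.0)]
print(render(triangle))
```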

That’s why, when your GPU struggles, the whole illusion collapses: dragons stutter, shadows glitch, and you end up with what feels like a PowerPoint presentation instead of smooth gameplay.
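Those frame rates translate directly into a time budget: at a given FPS, the GPU has 1000 ÷ FPS milliseconds to finish each frame. A quick Python sketch of that arithmetic (the function names and the 20 ms frame are hypothetical examples):

```python
def frame_budget_ms(target_fps: float) -> float:
    """Milliseconds the GPU gets to finish each frame at a given frame rate."""
    return 1000.0 / target_fps

def hits_target(frame_time_ms: float, target_fps: float) -> bool:
    """True if a frame that took frame_time_ms fits inside the budget for target_fps."""
    return frame_time_ms <= frame_budget_ms(target_fps)

for fps in (60, 120, 240):
    print(f"{fps:>3} FPS -> {frame_budget_ms(fps):.2f} ms per frame")
# 60 -> 16.67 ms, 120 -> 8.33 ms, 240 -> 4.17 ms

# A hypothetical frame that takes 20 ms misses a 60 FPS target (16.67 ms budget)
# and works out to roughly 50 FPS instead.
print(hits_target(20.0, 60), round(1000.0 / 20.0))  # False 50
```

Double the frame rate and the GPU gets half the time per frame, which is why high-refresh gaming asks so much more of the card.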

An easy-to-follow flowchart that explains how GPUs transform raw data into finished frames on your screen.

Why VRAM Matters (and How Much You Need in 2025)

VRAM (Video RAM) is your GPU’s personal stash of Red Bull and snacks. It’s the quick-access memory where all the textures, shaders, and assets live so your GPU doesn’t have to run back and forth to slower system RAM every two seconds.

The more detailed the game, the more VRAM it gulps down. High-res textures, massive open worlds, ray-traced reflections: all of these chew through memory like it’s an all-you-can-eat buffet.
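To put rough numbers on “chew through memory”, here’s a small Python sketch that estimates how much VRAM a single texture takes. It assumes an uncompressed 8-bit RGBA texture (4 bytes per pixel) with a full mipmap chain, the smaller copies GPUs use for distant surfaces. Real games usually use compressed texture formats that are several times smaller, so treat these as upper-end ballpark figures; `texture_bytes` is a hypothetical helper, not a real API.

```python
def texture_bytes(width: int, height: int, bytes_per_pixel: int = 4, mipmaps: bool = True) -> int:
    """Approximate VRAM for one uncompressed texture.

    bytes_per_pixel=4 assumes 8-bit RGBA. Each mipmap level is a quarter the
    size of the one before, so the full chain adds roughly one third on top.
    """
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_pixel
        if not mipmaps or (w == 1 and h == 1):
            break
        w, h = max(w // 2, 1), max(h // 2, 1)
    return total

MIB = 1024 * 1024
for size in (1024, 2048, 4096):
    print(f"{size}x{size}: {texture_bytes(size, size) / MIB:.1f} MiB")
# 1024x1024: 5.3 MiB, 2048x2048: 21.3 MiB, 4096x4096: 85.3 MiB
```

Multiply that by the hundreds of textures in a big open world and it’s easy to see how VRAM fills up.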

Here’s the 2025 cheat sheet:

- 4GB VRAM: Budget/entry-level. Great for indie games, older titles, and 1080p on low settings. But don’t expect miracles.

- 8GB VRAM: The sweet spot for most gamers at 1080p/1440p. Think of it as the balanced diet: enough for AAA titles without breaking the bank.

- 12GB+ VRAM: The heavyweight tier. Perfect for 4K gaming, ray tracing, AI workloads, or video editing without your GPU wheezing like an old PS2 fan.

⚠️ Pro tip: Having more VRAM doesn’t automatically mean better performance. It only helps if your game or workload actually uses it. Otherwise, it’s like carrying a giant backpack to the corner shop: impressive, but unnecessary.

GPUs for Gaming vs Productivity (Editing, AI, etc.)

Not all GPUs are raised in the same guild. Some are trained to battle dragons, while others are built to craft worlds behind the scenes.

- Gaming GPUs: These are tuned for speed and frame output. Their job is to deliver smooth gameplay, high FPS, and crisp visuals. Think of them as gladiators in the arena: fast, flashy, and built for combat.

- Productivity GPUs: These are tuned for raw computing muscle. They’re the blacksmiths and architects, perfect for video editing, 3D rendering, CAD, and AI training. They don’t care about how cool explosions look; they care about finishing the job with precision.

The catch? You can game on a productivity GPU and edit on a gaming one, but you won’t get the best performance. It’s like sending a knight to do a carpenter’s job: possible, but not efficient.

Final Thoughts: Is More GPU Always Better?

Getting the biggest, strongest, most shredded GPU behemoth isn’t always the first port of call. As I’ve said before, you should always buy for your needs; it’s a waste if you’re not using the hardware to its fullest potential. If all you’re doing is playing World of Warcraft on your orc main, killing Alliance softies all day… ahem! Then a high-spec GPU is overkill. You could save your money and spend it on something else: a more powerful CPU, a better-looking case, or even a kebab!

Ask yourself:

- What resolution am I playing at? 1080p, 1440p, or 4K?

- What’s my refresh rate? 60Hz, 144Hz, or higher?

- What’s my main workload? Gaming, editing, AI, killing night elves or a mix?

- Do my CPU and RAM keep up with the GPU? No point pairing a dragon-slayer with a rusty sword.
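The first two questions go hand in hand, because resolution times refresh rate tells you how many pixels your GPU has to deliver every second. A quick Python sketch of that arithmetic (the helper name is hypothetical, and pixel count is only a rough proxy, since settings like ray tracing change how expensive each pixel is):

```python
def pixels_per_second(width: int, height: int, refresh_hz: int) -> int:
    """Pixels the GPU must produce each second to fill every refresh of the screen."""
    return width * height * refresh_hz

setups = {
    "1080p @ 60Hz": (1920, 1080, 60),
    "1440p @ 144Hz": (2560, 1440, 144),
    "4K @ 60Hz": (3840, 2160, 60),
}
baseline = pixels_per_second(*setups["1080p @ 60Hz"])
for name, (w, h, hz) in setups.items():
    pps = pixels_per_second(w, h, hz)
    print(f"{name}: {pps / 1e6:.0f} million pixels/s ({pps / baseline:.1f}x 1080p60)")
```

By this measure, 1440p at 144Hz asks for roughly 4.3 times the pixels per second of 1080p at 60Hz, and 4K at 60Hz about 4 times, which is why those setups call for a beefier GPU.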

The truth: for the same money, a balanced build (CPU, GPU, RAM, storage) will almost always outperform a lopsided one. Sometimes “good enough” really is good enough, and overspending on unused GPU power is like buying a Ferrari just to do school runs.

Bottom line: Your GPU works by splitting mountains of data into tiny parallel tasks, crunching them fast, and painting the frames you see. But in 2025, the smart move isn’t always “more power”; it’s the right power for the job. Because with great power comes greater electricity bills.

Want to dive deeper and understand how your CPU works? Or maybe you’re shopping around and need a buying guide for the latest Ryzen CPUs or Intel chips? Don’t worry – Gameplay Techy has you covered with simple, gamer-friendly guides that cut through the jargon and tell you exactly what you need to know.

Pair this GPU knowledge (Pssst, click here if you’re on a budget!) with the right CPU, and you’ll have a balanced build that won’t just survive 2025, it’ll dominate it.